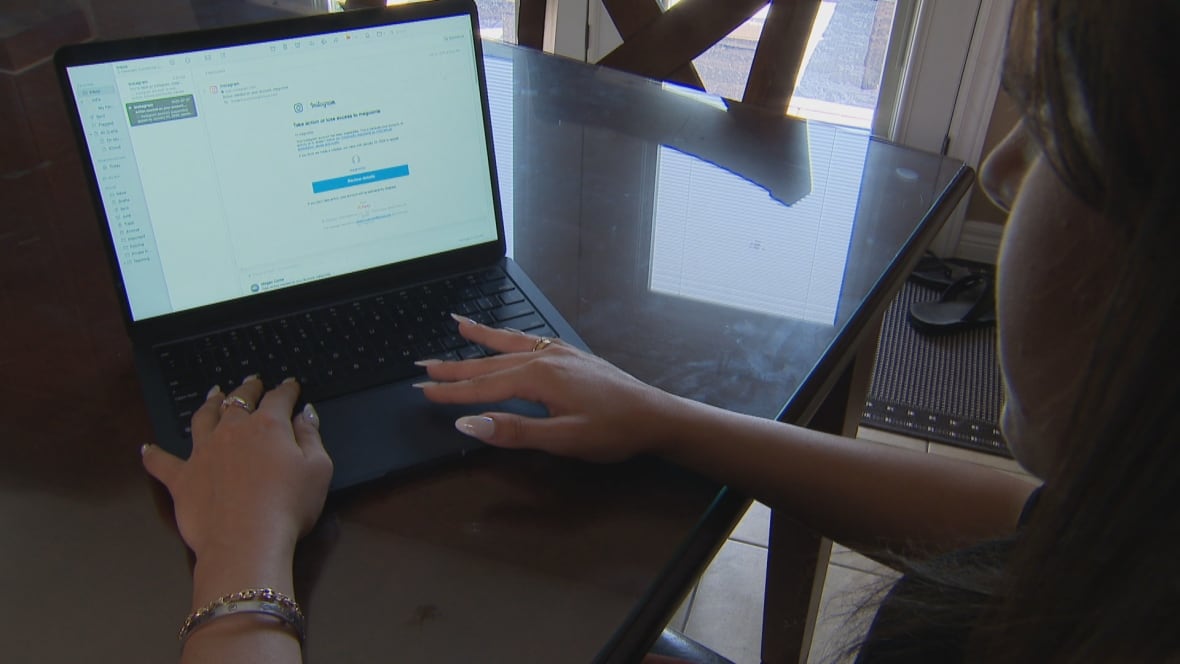

A high school history teacher in Vaughan, Ontario, says she lost access to her Instagram account, along with thousands of photos, conversations and personal memories, after the social media platform mistakenly accused her of posting material that, she said, amounted to “child sexual exploitation, abuse and nudity.”

Megan Conte says it took days to reach a human at Meta, which owns Instagram, to plead her case. But even that did not get her account reinstated, she said.

“When I read what they were accusing me of, I was deeply hurt. It shocked me, especially considering what I do for a living,” she told CBC Toronto. “And there was no one I could contact, no human.”

Conte received an apology from Meta, the parent company of Instagram, Facebook, WhatsApp and Threads, and her account was unlocked hours after CBC Toronto contacted the company to ask about her complaints.

“We’re sorry that we got this wrong and that you weren’t able to use Instagram for a while,” reads an email from Meta to Conte. “Sometimes we need to take action to keep our community safe.”

Conte said she is far from alone in her concerns about arbitrary, hard-to-reverse decisions made by social media moderators.

An online petition started by Brittany Watson of Peterborough, Ontario, which complains about social media companies’ perceived overreliance on artificial intelligence tools rather than humans, has so far gathered more than 34,000 signatures from people around the world.

Watson launched her campaign after she, too, was banned by Meta in May, for reasons that she said are still unclear. The ban was lifted after two weeks, she said.

“Social media isn’t just social media anymore. It’s part of daily life,” Watson told CBC Toronto. “And now they’re taking it away without any explanation.”

She said she is overwhelmed by the international response to her petition. “People are so frustrated by this.”

Watson said the aim of her petition, and of an accompanying website, is to push social media sites toward greater accountability. She would like to see Meta rework its moderation tools so they flag online violations more accurately.

“I think the bots should be reset,” she said.

Both Watson and Conte say they have no proof that AI is behind the wrongful bans and suspensions.

A Meta spokesperson would not comment on how much, or how little, the company’s social media platforms rely on AI to moderate users’ posts.

But Levy, a technology expert in London, Ontario, says it would be physically impossible for Meta to rely on humans alone to moderate its platforms.

“With more than three billion regular users across these platforms, there is no way Meta could hire enough people in the world to review everything that gets posted,” he said. “Automation is the only way they can do this at scale.

“It’s automation that is running things.”

A Meta spokesperson told CBC Toronto that the company uses a combination of people and technology to detect violations of its community standards. The company also said it has not seen an increase in the number of accounts suspended in error.

“We take action on accounts that violate our policies, and people can appeal if they think we’ve made a mistake,” the spokesperson said.

Conte’s problems began on July 26, when she received a message from a friend pointing out that her Instagram account was inaccessible.

She logged in and found a notice from Meta that read, in part: “Your Instagram account has been suspended. This is because your account, or activity on it, doesn’t follow our Community Standards on child sexual exploitation, abuse and nudity.”

Even now, Conte said, she has no idea what triggered Meta’s suspension.

‘Beyond inconvenience’

“The accusation is horrible, offensive and completely false,” she told CBC Toronto. “I’m a high school teacher, and to be associated with something like that … it has been traumatic and harmful.”

Making things even more confusing, she said, is the fact that she hadn’t posted anything in a couple of months.

“This goes beyond inconvenience,” she said. “I lost about 15 years of conversations, memories, business contacts, creative work and social presence. Photos of loved ones, collaborations, messages with friends, all of it gone in an instant because of a machine’s decision.”

All of the lost content was restored when the suspension was lifted.

Conte said having her account shut down “feels like a kind of identity theft. It’s emotionally exhausting and professionally disruptive.”

After several days of trying, without success, to navigate Meta’s appeals process, Conte said she finally reached what she believes was a human through the platform’s text-based support chat. But she could only access that feature, she said, after paying a fee to verify her mother’s Instagram account.

Conte said she had her mother’s account verified because she believes Instagram takes appeals from verified accounts more seriously.

However, after a text exchange lasting more than an hour, her account still was not reinstated.

Meta only unlocked her account last week, after CBC Toronto contacted the company. That was also when she received the apology.

Levy said people who, like Conte, feel they have been wrongly suspended have few options.

“There’s no legislation on the books that forces the company to use humans instead of artificial intelligence for this work,” he said. “They can do whatever they want.”